One of the main applications for Rust is the Servo Parallel Browser Project. This means Rust must fundamentally be a parallel language. Indeed, the language already has Erlang-inspired lightweight tasks, and communication using ports and channels. Unfortunately, most of the code that uses these features consists of tiny microbenchmarks. While these are useful for making sure the low-level mechanics are efficient, they do not provide much insight into how Rust feels and performs overall as a parallel language. To this end, I decided to begin my summer internship by implementing most of the Graph500 Breadth First Search Benchmark.

I started out by writing a purely sequential version of the benchmark that handled generating the edge list, building a graph structure from the edge list, generating a BFS tree for several randomly chosen keys, and finally doing some validation to give us some idea that the algorithm might be correct. For my graph data structure, I used an adjacency list; each vertex keeps a list of all the vertices it is connected to. I used the basic search algorithm, which you can find on the Wikipedia page for breadth first search.
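
To make the data structure and the search concrete, here is a minimal sketch in present-day Rust syntax. It is not the benchmark's code: the names `Graph`, `build_graph`, and `bfs` are made up for illustration, and representing the BFS tree as a parent vector where the root is its own parent is an assumption on my part.

```rust
use std::collections::VecDeque;

/// Adjacency list (hypothetical alias): `graph[v]` lists every vertex
/// that shares an edge with `v`.
type Graph = Vec<Vec<usize>>;

/// Build an undirected adjacency list from an edge list.
fn build_graph(num_vertices: usize, edges: &[(usize, usize)]) -> Graph {
    let mut graph = vec![Vec::new(); num_vertices];
    for &(u, v) in edges {
        graph[u].push(v);
        graph[v].push(u);
    }
    graph
}

/// Basic queue-based BFS. Returns a parent vector describing the BFS tree:
/// `Some(p)` for reached vertices (assumption: the root is its own parent),
/// `None` for vertices the search never reached.
fn bfs(graph: &Graph, root: usize) -> Vec<Option<usize>> {
    let mut parent = vec![None; graph.len()];
    parent[root] = Some(root);
    let mut queue = VecDeque::new();
    queue.push_back(root);
    while let Some(u) = queue.pop_front() {
        for &v in &graph[u] {
            // A vertex without a parent has not been seen yet.
            if parent[v].is_none() {
                parent[v] = Some(u);
                queue.push_back(v);
            }
        }
    }
    parent
}
```

Calling `bfs(&build_graph(n, &edges), root)` gives one BFS tree per search key.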

The initial results seemed fast to me (although I’m not a graph performance expert, so I don’t know how my results compare to other implementations). Once I got to validation, however, things got slow. The algorithm I used for Step 5 of the validation (ensuring that edges in the BFS tree exist in the original graph) is O(N * E), where N is the number of vertices and E is the number of edges in the graph. While the right thing to do would have been to find a more efficient algorithm, I decided to throw some parallelism at the validation code instead.
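
To show where the O(N * E) cost comes from, here is a rough sketch of that kind of check in present-day Rust. The function name `tree_edges_exist` and the parent-vector representation are assumptions for illustration, not the benchmark's actual code.

```rust
/// For every vertex with a parent, scan the whole edge list for the tree edge.
/// That is one O(E) scan per vertex, hence O(N * E) overall.
/// (Hypothetical helper; assumes the root is recorded as its own parent.)
fn tree_edges_exist(parent: &[Option<usize>], edges: &[(usize, usize)]) -> bool {
    parent.iter().enumerate().all(|(v, p)| match *p {
        // Unreached vertices have no tree edge to check.
        None => true,
        // The root has no incoming tree edge.
        Some(p) if p == v => true,
        // The edge list is undirected, so accept either orientation.
        Some(p) => edges.contains(&(v, p)) || edges.contains(&(p, v)),
    })
}
```

Each `contains` call is a linear scan that compares tuples, which is why membership testing dominates the running time.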

The outer loop of the code in question is just mapping a function over a vector, so I wrote a parallel map. The simplest approach is to spawn a task for each element in the input vector, but then the task scheduling overhead could destroy any speedup you might get from parallelism. Instead, my parallel map function is controlled by a maximum number of tasks to spawn, and a minimum number of items to process per task. This pushes towards coarser-grained parallelism, which seems like a better fit for multiprocessors.
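
Here is a sketch of what such a parallel map could look like in present-day Rust, using scoped threads from the standard library in place of Rust tasks. The name `par_map` and the parameters `max_tasks` and `min_granularity` are hypothetical, and the chunking policy is my guess at one reasonable reading of the description above.

```rust
use std::thread;

/// Map `f` over `items` using at most `max_tasks` threads, each handling
/// at least `min_granularity` items (the final chunk may be smaller).
/// Hypothetical signature; the results keep the order of the input.
fn par_map<T, U, F>(items: &[T], max_tasks: usize, min_granularity: usize, f: F) -> Vec<U>
where
    T: Sync,
    U: Send,
    F: Fn(&T) -> U + Sync,
{
    if items.is_empty() {
        return Vec::new();
    }
    // Split evenly across the allowed threads, but never below the minimum chunk size.
    let max_tasks = max_tasks.max(1);
    let per_task = (items.len() + max_tasks - 1) / max_tasks;
    let chunk_size = per_task.max(min_granularity).max(1);

    let f = &f;
    thread::scope(|s| {
        // Spawn every worker before joining any of them.
        let handles: Vec<_> = items
            .chunks(chunk_size)
            .map(|chunk| s.spawn(move || chunk.iter().map(f).collect::<Vec<U>>()))
            .collect();
        // Join in spawn order so the output lines up with the input.
        handles
            .into_iter()
            .flat_map(|h| h.join().expect("worker thread panicked"))
            .collect()
    })
}
```

For example, `par_map(&input, 4, 2, |x| x * x)` on ten items splits the work into four chunks of at most three items each.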

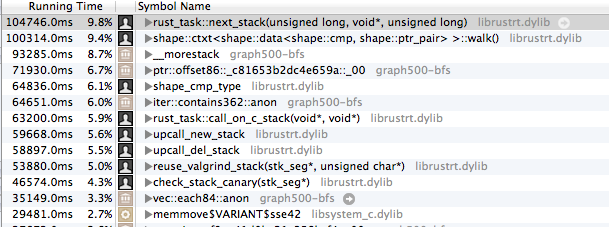

Sadly, once all this was done, I only had about a 1.4x speedup on my quad-core hyperthreaded machine. This was basically an embarrassingly parallel problem, so I expected a speedup in the 4-8x range. Patrick Walton suggested I look at this using Instruments.app, and here’s what we found:

Around 40% of our time was spent in stack-growth related functions. The first step, then, was to use a larger minimum stack size. Once I ran the test using a 2MB stack size, the stack growth function disappeared from the profile and I was able to get about a 3.4x speedup over using the sequential map. This seemed reasonable to me, so I moved on to parallelizing the actual search algorithm.

Again, not being a graph processing expert, I wasn’t sure what algorithm to use. Most of my web searching led to more complicated algorithms that are highly tuned for certain architectures. I was looking for something simple. Eventually, I found this page which helped clarify things and inspired the approach I ended up taking. My approach involved keeping a color vector, where each vertex is colored either white, gray, or black. White nodes are those that we haven’t seen before, gray nodes are seen but not yet explored, and black nodes are the ones that are finished. We start by setting the root of the search to gray, and then keep producing a new color vector until all of the gray nodes have disappeared. In pseudocode, the algorithm works like this:

    Initialize color vector
    While there are gray nodes
        For each vertex v
            If v is white then
                If any neighbors are gray then
                    Turn gray
                Else
                    Stay white
            Else if v is gray then
                Turn black
            Else if v is black then
                Stay black
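
A sequential rendering of this pseudocode in present-day Rust might look like the sketch below. The names `Color`, `step`, and `color_bfs` are made up for illustration, and the sketch only tracks colors; producing an actual BFS tree would also mean recording which gray neighbor each vertex saw.

```rust
#[derive(Clone, Copy, PartialEq, Eq, Debug)]
enum Color {
    White, // not seen yet
    Gray,  // seen but not yet explored
    Black, // finished
}

/// Produce the next color vector. Each entry reads only the previous
/// vector, so the entries can be computed independently of each other.
fn step(graph: &[Vec<usize>], colors: &[Color]) -> Vec<Color> {
    (0..graph.len())
        .map(|v| match colors[v] {
            Color::White => {
                if graph[v].iter().any(|&n| colors[n] == Color::Gray) {
                    Color::Gray
                } else {
                    Color::White
                }
            }
            // Gray turns black; black stays black.
            Color::Gray | Color::Black => Color::Black,
        })
        .collect()
}

/// Keep producing new color vectors until no gray vertices remain.
fn color_bfs(graph: &[Vec<usize>], root: usize) -> Vec<Color> {
    let mut colors = vec![Color::White; graph.len()];
    colors[root] = Color::Gray;
    while colors.contains(&Color::Gray) {
        colors = step(graph, &colors);
    }
    colors
}
```

When the loop ends, every vertex reachable from the root is black and everything else is still white.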

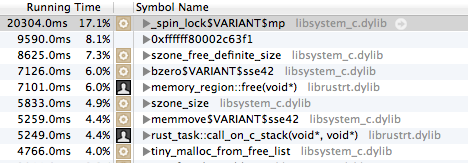

Since each vertex’s new color depends only on the previous color vector, and not on any other vertex’s new color, we can easily run the per-vertex loop in parallel. Unfortunately, doing this led to a 50-100x slowdown. Clearly this is not what we wanted! To try to figure out what went wrong, I once again turned to Instruments, and saw this:

Here we see that we’re spending an absurd amount of time in a spinlock. Drilling down a little further shows that this seems to have to do with allocating and freeing in Rust’s exchange heap. This is likely due to the way Rust restricts sharing between tasks. Each of the parallel blocks needs to access the graph edge list, which Rust must copy into each task. Since this is a fairly large vector of vectors, and vectors are allocated in the exchange heap, this leads to a lot of contention.

So, what next? One approach would be to rewrite the parallel BFS algorithm to avoid these pitfalls. Using a more traditional distributed memory approach, with longer running tasks that are each responsible for a portion of the color vector, could avoid some of these problems. Ideally, though, we could use the code as written, and Rust would realize that since the edge lists are immutable, it can simply share them between all tasks. Niko has some ideas along these lines.
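
As a rough illustration of what sharing could look like, here is a sketch in present-day Rust, where an immutable value can be shared between threads through the standard library's reference-counted `Arc` instead of being copied. This is not how the benchmark worked at the time; the function `has_gray_neighbor` and its parameters are hypothetical, and each worker is simply given one contiguous range of vertices.

```rust
use std::sync::Arc;
use std::thread;

/// For each vertex, report whether any neighbor is marked in `gray`.
/// The graph and the marks are shared read-only; nothing is copied per worker.
/// (Hypothetical helper for illustration only.)
fn has_gray_neighbor(
    graph: Arc<Vec<Vec<usize>>>,
    gray: Arc<Vec<bool>>,
    num_workers: usize,
) -> Vec<bool> {
    let n = graph.len();
    let num_workers = num_workers.max(1);
    // Size of the vertex range owned by each worker (at least 1).
    let chunk = ((n + num_workers - 1) / num_workers).max(1);

    let handles: Vec<_> = (0..n)
        .step_by(chunk)
        .map(|start| {
            // Cloning an Arc bumps a reference count; the vectors stay in place.
            let graph = Arc::clone(&graph);
            let gray = Arc::clone(&gray);
            thread::spawn(move || {
                let end = (start + chunk).min(graph.len());
                (start..end)
                    .map(|v| graph[v].iter().any(|&u| gray[u]))
                    .collect::<Vec<bool>>()
            })
        })
        .collect();

    // Join in spawn order so results line up with vertex numbers.
    handles
        .into_iter()
        .flat_map(|h| h.join().expect("worker thread panicked"))
        .collect()
}
```

Because the workers only read the shared vectors, no locking is needed around the graph itself.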

Besides these parallel performance opportunities, there are a few other places where Rust could improve. First, I had to write my own queue because the standard library’s deque is unusable due to a compiler bug. The same bug meant I had to include my new parallel module in my benchmark code rather than adding it to the standard library, though it should be fixed soon. Second, even in the more optimized versions of the validation code, we end up spending a lot of time in compare glue. The reason is that the edge list is a vector of tuples of two uints, and we spend most of our time testing for membership. For simple scalar types, these comparisons are very fast. For tuples, however, Rust falls back on a slower shape interpreter. Ideally, for simple tuples, Rust or LLVM would generate optimized comparison code.

Overall, this has been a fun experience, and it has revealed a lot of opportunities to improve Rust!

The code for this benchmark is currently available on a branch in my Rust fork.